Report from the Royal Society Web Science conference

I’ve been to the Royal Society once before for an event about understanding risk, and I was surprised to see some of the same people at the Web Science conference. I'm envious that for some people the Royal Society is a way of life, especially the man who wears two pairs of glasses at the same time and always asks questions from the perspective of torpedo design – I should say that, so far as I understand the questions, they always appear to be pertinent, so far as they are comprehensible.

You might reasonably ask what Web Science means; ironically, it's a question that Google will not help you answer. I'm not sure there is a short answer, but there were strong and consistent links between the speakers, so it definitely designates something. In terms of which university department Web Science belongs in, it seems to be something of a coalition of disciplines, mainly social science, network mathematics and computer science.

However you triangulate the location of Web Science, it's in an area that I think is very exciting. I hope to have a tiny claim to have played some part in the area through having worked on the BBC’s Lab UK project, which uses the web as a social laboratory.

Despite the spectrum of intellectual backgrounds, day one was remarkably focused. Other than to call it Web Science, the only way I can think of to elucidate the commonality is to use the example of Jon Kleinberg’s talk, which seemed most neatly to encapsulate it. Here goes…

You may have heard of Stanley Milgram for the famous electric shock experiment, but he also did an investigation which gave prominence to the idea of ‘6 degrees of separation’. His ingenious method was to send out letters at random, each containing the name of a target person and a short description of that target (e.g. Jeff Adams, a Boston-based lawyer). In the letter there were also instructions indicating that it should be forwarded to someone who might know the target, or know someone who might know someone who would know the target, etc.

Famously the letter will arrive at its target in six steps, on average, hence the frequently cited idea that you are six friendships away from everyone in the world (though his experiment was US based).

There’s a strikingly effective way to understand how it can be that the letter finds its destination. It involves imagining the balance between your local friends and your distant friends.

If you only had local friends then a letter would take a large number of steps to find a target individual in, say, Australia. The reason the average can be as low as six steps is that everyone has friends who live abroad, or in another part of the country, so the letter can cover long distances in big hops.

However, imagine all friendships were long distance. If I live in London and I want to get a letter to the lawyer in Boston then I’m going to have a problem. I could send the letter to a friend who lives in Boston, but then his friends are spread equally around the globe, just like mine are. So although the letter can travel great distances, its course is so unpredictable that no one can tell which direction to forward the letter to get it nearer to its target.

It turns out that there is a specific ratio of long and short links which allows the notional letter to get to its destination in the smallest number of links.
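For the curious, here is a rough sketch of the idea in Python. It is a toy of my own, not the model from the talk: people stand in a ring, everyone knows their two neighbours plus one long-range friend, and the `exponent` parameter (an assumed knob) controls how strongly long-range friendships favour nearby people. Each holder of the letter simply passes it to whichever friend is closest to the target.

```python
import random

def ring_distance(a, b, n):
    """Shortest distance between positions a and b on a ring of n people."""
    d = abs(a - b)
    return min(d, n - d)

def build_contacts(n, exponent, rng):
    """Give everyone two local friends plus one long-range friend.

    A long-range friend at distance d is picked with probability
    proportional to d ** -exponent (an assumption of this toy model).
    Needs n >= 4 so that at least one long-range distance exists.
    """
    distances = list(range(2, n // 2 + 1))
    weights = [d ** -exponent for d in distances]
    contacts = {}
    for person in range(n):
        d = rng.choices(distances, weights=weights)[0]
        direction = rng.choice((-1, 1))
        far_friend = (person + direction * d) % n
        contacts[person] = [(person - 1) % n, (person + 1) % n, far_friend]
    return contacts

def greedy_route(contacts, source, target, n):
    """Forward the letter to whichever friend is closest to the target."""
    hops = 0
    current = source
    while current != target:
        current = min(contacts[current],
                      key=lambda friend: ring_distance(friend, target, n))
        hops += 1
    return hops

def average_hops(n, exponent, trials, seed=0):
    """Average number of hops over randomly chosen sender/target pairs."""
    rng = random.Random(seed)
    contacts = build_contacts(n, exponent, rng)
    total = 0
    for _ in range(trials):
        source, target = rng.sample(range(n), 2)
        total += greedy_route(contacts, source, target, n)
    return total / trials

if __name__ == "__main__":
    # exponent 0 = friends scattered evenly; large exponent = nearly all local
    for exponent in (0.0, 1.0, 3.0):
        print(exponent, average_hops(5000, exponent, trials=500))
```

Running it with a few different exponents is a cheap way to get a feel for the trade-off described above: with an exponent of zero the long-range friends are scattered evenly and hard to exploit, while with a large exponent nearly all friends are local and the letter crawls.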

This discovery came some time ago, but nobody could measure the actual ratio of short and long range friends that real people have. To measure it would require a list of millions of people, their location and the names of their friends. Cue Facebook…

Computer scientists have analysed the data on Facebook and it turns out that the actual ratio of short to long links is very close to the optimal ratio, in terms of getting that letter to its destination. That is, the mixture of distant contacts and local ones as indicated by the information on Facebook is almost exactly the right one to deliver the letter in the smallest number of links – six.

That’s pretty incredible, and of course it probably isn’t a coincidence. Social scientists posit that perhaps in some way people will their friendships to exhibit this distribution – after all, as we’ve just demonstrated, in one sense it’s the most effective mode of linkage. Whatever the eventual explanation, it's a fascinating insight into human behavior.

Stepping back from the specifics of this argument, here is a perfect example of web science: mathematical theory posing a hypothesis (calculating the optimum ratio), computer science providing empirical evidence (working out the real world ratio), and then a social scientific search for explanation. It's the combo of these three areas which seems to constitute the "new frontier" described in the title of the Royal Society event.

There are other configurations of the various disciplines. Jennifer Chayes, of Microsoft Research, pointed out that mathematicians like herself will study any kind of network for its intellectual beauty. She suggested that a very important role for social scientists was to pose meaningful real-world questions which mathematicians and computer scientists could then collaborate to answer.

The ‘web science approach’ has produced all kinds of exciting results. For example Albert-László Barabási (whose excellent book Bursts I can highly recommend) has used the data to discover that the web is a 'rich get richer' type of network, meaning that it has a distribution of a few highly connected websites (e.g. Google) and many less connected web pages (e.g. this one) - which it turns out makes it similar to many other types of network. It's by using this kind of understanding of how the web grows naturally that Google can tell a potentially spammy website from a real one.
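A 'rich get richer' network is easy to grow for yourself. The sketch below is a simplified toy of my own, not Barabási's actual model or data: each new page makes a single link to an existing page, and the chance of picking a page is proportional to the number of links it already has. The function name and the starting pair of pages are assumptions made for illustration.

```python
import random
from collections import Counter

def grow_network(n_pages, seed=0):
    """Grow a link network one page at a time.

    Each new page links to one existing page, chosen with probability
    proportional to how many links that page already has.
    Returns a Counter mapping page -> number of links.
    """
    rng = random.Random(seed)
    # Start with two pages linked to each other. Every page appears in
    # this list once for each link it takes part in.
    endpoints = [0, 1]
    for new_page in range(2, n_pages):
        # Well-linked pages appear more often, so they are picked more often.
        chosen = rng.choice(endpoints)
        endpoints.extend([new_page, chosen])
    return Counter(endpoints)

if __name__ == "__main__":
    degrees = grow_network(2000)
    print("best connected page has", max(degrees.values()), "links")
    print("pages with a single link:",
          sum(1 for d in degrees.values() if d == 1))
```

The trick of keeping one list entry per link end means a plain random choice is automatically weighted towards popular pages, with no explicit probabilities needed.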

A number of predictions flow from this work which I won’t go into here, but there are plenty of practical results coming out of it. To prove this he showed a graph of citations for ‘network science’ papers, which has peaked recently at 800 a year, compared with approximately 300 for the famous Lorenz attractor paper which more or less defined chaos theory, and even fewer for various other epochal chaos papers. That isn’t surprising: Barabási used examples from yeast proteins to human genomics in his talk - it's much deeper and more widely applicable than just the web.

If you’re still thinking this research might have limited practical application then Robert May’s talk should convince you otherwise. He demonstrated that understanding of ecological networks has spilled over into modeling the extremely real subject of HIV transmission. One of the most ingenious ideas he brought up was that of giving a vaccine for an infectious disease to a population and asking them to administer it to a friend. That means the person with most friends gets the most vaccine. This is handy, because the person with the most friends is also the person most likely to spread the disease.
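The 'give it to a friend' trick works because a friend nominated by a random person tends to have more friends than the random person does. Here is a small sketch of that effect on a made-up village; the village, its names and the function name are all my own inventions for illustration, not anything shown in the talk.

```python
def mean(values):
    """Arithmetic mean of an iterable of numbers."""
    values = list(values)
    return sum(values) / len(values)

def friend_degree_gap(friends):
    """Compare a random person's friend count with a nominated friend's.

    `friends` maps each person to the set of people they know.
    Returns (average friends per person,
             average friends of a friend nominated by a random person).
    People with no friends cannot nominate anyone, so they are skipped
    in the second figure.
    """
    own = mean(len(f) for f in friends.values())
    nominated = mean(
        mean(len(friends[x]) for x in f) for f in friends.values() if f
    )
    return own, nominated

# A hypothetical village: one sociable person who knows four loners.
village = {
    "hub": {"a", "b", "c", "d"},
    "a": {"hub"},
    "b": {"hub"},
    "c": {"hub"},
    "d": {"hub"},
}

if __name__ == "__main__":
    own, nominated = friend_degree_gap(village)
    print("average person has", own, "friends")
    print("average nominated friend has", nominated, "friends")
```

In this village four out of five people would hand their dose to the one person who knows everybody, which is exactly who you would want to vaccinate first.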

There were so many other contributions that an exhaustive list of even the most exciting points would also be exhausting to read, so I’ll stop now. But it was an exciting event, not least because it's a genuine intellectual frontier, and one that seems surprisingly easy to understand, at least in a broad sense, for people who don't work in full-time academia.

Monday, September 27, 2010

Tuesday, August 24, 2010

Dr Beard: Or how I learned to stop worrying and love the badinage

Why are people so nervous of the status update? [Published in .net mag]

Mary Beard, Cambridge Professor of Classics, doesn’t like Twitter. You might think that isn’t a surprise – the two things are from different chronological perspectives, but then she does have a successful, if initially reluctant, blog. Only duress from her publicist (or whatever equivalent Cambridge professors have) made her start publishing on the web. Now she describes herself as a convert, and blogging has been transformed in her mind from the basest means of communication to a medium where she can link to research papers and discuss in more depth than she would “even in the Times Literary Supplement”.

I gathered this from her presentation at a conference on improving communication between academia and the public. When she spoke of Twitter she could hardly be more emphatic that she will never use it - hers is a crusade against the tyranny of 140 characters. I imagine she once felt similarly of blogging.

There are few things in the world more over-journalised than Twitter, but no matter what I do I can't pare the following down to less than 700 words - so please accept my apologies and forgive my verbosity, or perhaps blame Professor Beard for provoking me.

If you bother to ask people about their emotional response to status updates you’ll find an undercurrent of antipathy that verges on a rip-tide, and it seems to be Twitter that draws most ire. Even David Cameron has recourse to unparliamentary language when asked his views on the site.

Why is it then that people become upset at the idea of Twitter? One friend who has recently started using the site told me that he felt he’d lost some kind of battle when he gave in to it - why the fight?

The truth is that it’s just small talk. That’s the point of it. If you don’t want to listen to someone’s blatherings then don’t follow them, just as you avoid boring colleagues. If you don’t want to hear any small talk at all then you can always retreat to the desert in the manner of a biblical hermit. Phatic communion is the sacrament that bonds us, and Twitter’s 140-character limit is designed to enforce short messages strengthening social bonds. From all the hostility to Twitter you'd think that people only spoke in brilliant, lengthy soliloquies, rather than the boring platitudes that are the majority of everyone’s conversation.

Do people worry that they’ll sign up and then discover that they’ve embarrassed themselves by participating in some passing fad?

Or perhaps it’s a misunderstanding about the nature of publishing text. Do we worry that because Twitter is a public medium there is some kind of narcissism and arrogance associated with making your personal trivia available to the world? Some might even see these same qualities in the kind of nerdy early adopters of Twitter and think that being a Twitee (I don’t know what the term is; one thing I won’t indulge in with Twitter is its artificial portmanteau language) says something rather unpleasant about your personality.

Or is it a perceived lack of quality assurance? Do the anti-Twitter demographic think that users lack some kind of quality filter and will sign up to any craze like lambs to the attention span slaughter? If so, I think people should be reassured that cynicism is alive and well on the web – and it fits perfectly well into your allotted 140 characters should you need to express it.

These are the kinds of things people say when they explain their antipathy. But I think they are excuses; the real culprit is unease at conducting an important part of your social life online. Facebook is one thing – we’ve always mediated event invites textually, but to carry out the most mundane social chit-chat on the web is a psychological leap.

Moreover, if you aren’t able to speak to a real world friend on Twitter then it can’t serve you as a small-talk-shop, because the point is primarily to reinforce social bonds, not create them. If you don’t know anyone on the site then it’s the written equivalent of hearing one end of a phone conversation on the bus – which perhaps accounts for the anger that some people express at the medium.

It might not be under Twitter's auspices, but I think the status update is here to stay. Today's unbelievers are just waiting for the social connections to welcome them to the short-messaging congregation.

@marybeard Sorry about calling you a doctor in the title – I know you’re a professor really.

Sunday, July 4, 2010

Lost in the noise: what we really think about musical genres

What do we really think about music? I've tried to find some data about how people think about musical genres using the Last FM API.

Ishkur's strangely compelling guide to electronic music is a map of the relationships between various kinds of music, and a perfect example of the incredibly complex genre structures that music builds up around itself. He lists eighteen different sub-genres of Detroit techno including gloomcore, which I suspect isn't for me. I wanted to try and create a similar musical map using data from Last FM.

I've written a bit before about the way in which the web might change the development of genres - what I didn't ask was how important the concept of genre would continue to be. It's difficult to listen to music in a shop, so having a really good system of classification means you have to listen to fewer tracks before you find something you like. Also, in a shop you have to put the CD in a section, so it can only have one genre attributed to it.

But on the web it's easy to listen to lots of 30 second samples of music, so arguably you don't need to be so assiduous about categorisation. In addition, the fact that music doesn't have to be physically located in any particular section of a shop also undermines the old system - one track can have two genres (or tags, in internet parlance).

Despite this, online music shops like Beatport still separate music into finely differentiated categories, much as you would find in a bricks and mortar record shop. But do they reflect the way people actually think about their musical tastes?

Interestingly, two of the most commonly used tags on Last FM are "seen live" and "female vocalist" (yes, women have been defined as "the other" again), which aren't traditional genres at all. "Seen live" is obviously personal, and "female vocalist" isn't a part of the normal lexicon. Looking through people's tags other anomalies crop up - "music that makes me cry" and tags based on where a person intends to listen to the music are examples.

The more obscure genres from Ishkur's guide are lost in the noise of random tags that people have made for themselves. I would suggest Gloomcore isn't used in the functional way that 'metal' or 'pop' are. It's a classification that people do not naturally use to denote a particular kind of music on Last FM - perhaps it's a useful term for writing about music, but nobody thinks they'd like to stick on some Gloomcore while they make breakfast.

I searched the Last FM database of top tags - the 5 tags most used by each user - and assumed that there was a link between any two genres that one person liked. For example, if you have 'gothic' and 'industrial' as top tags then I marked those two tags as linked. The diagrams below show the links that occurred between 1000 random Last FM users. A link between two tags appears on a diagram only if it occurred more than about 15 times.
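For anyone who wants to try something similar, the counting step might look roughly like the sketch below. It is a reconstruction for illustration rather than the script I actually ran: the function names and the sample data are made up, the fetching of tags from the Last FM API is left out entirely, and the threshold is a parameter rather than the figure of about 15 used for the diagrams.

```python
from collections import Counter
from itertools import combinations

def count_tag_links(users_top_tags):
    """Count how many users have each pair of tags among their top tags."""
    links = Counter()
    for tags in users_top_tags:
        # sorted(set(...)) so each pair is counted once per user and
        # ("gothic", "industrial") is the same key as the reverse order.
        for pair in combinations(sorted(set(tags)), 2):
            links[pair] += 1
    return links

def to_dot(links, min_count):
    """Render pairs seen at least min_count times as an undirected DOT graph."""
    lines = ["graph genres {"]
    for (a, b), n in sorted(links.items()):
        if n >= min_count:
            lines.append(f'  "{a}" -- "{b}" [label={n}];')
    lines.append("}")
    return "\n".join(lines)

# Made-up sample data, standing in for the top tags of real users.
sample = [
    ["gothic", "industrial", "metal"],
    ["industrial", "gothic"],
    ["indie", "rock", "seen live"],
    ["rock", "seen live"],
]

if __name__ == "__main__":
    print(to_dot(count_tag_links(sample), min_count=2))
```

The output is plain text in the .dot format, which Graphviz can turn into a spider diagram.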

Unsurprisingly, indie and rock are things that people often note they have seen live. By contrast, though people might talk of having heard electronic music 'out' (i.e. not at home), they don't care enough about it to define a tag around it.

I was surprised to see tags such as 'British' and 'German', so I broke the above diagram down by country. Last FM has significant UK, German and Japanese user bases. Here is the result for Germany:

Here is the Japanese version:

Yep, plenty of references to Japan. The only nation to feature Jazz too. Here is the British version:

In Japan and Germany a defining feature of music is that it is Japanese or German. In Britain we don't care. I suspect that's because our musical tastes aren't defined against a background of lyrics in a foreign language, as perhaps they are in the other two countries.

Last FM may well have a particular 'subculture' of user in each country, so it's hard to draw any firm conclusions because of this potential skew. As with so many of the insights you can gain from data gleaned from the web, at the moment it's only possible to tell that one day this kind of tool could be very revealing about our psychology - what it will reveal isn't very clear yet.

None the less, it will be interesting to see how these diagrams evolve over time - perhaps they will gradually diverge from the old names we've used to identify music, or perhaps there will be less and less consensus about what genres are called.

Incidentally, this would have been a post about data from Linked In, looking at the way your profession affects the kind of friendship group you have, but the Linked In API is so restricted that I gave up.

The data is available below. It's in the .dot format that creates these not very sexy spider diagrams.

http://jimmytidey.co.uk/data/lastfm_genre_links/global.php

http://jimmytidey.co.uk/data/lastfm_genre_links/germany.php

http://jimmytidey.co.uk/data/lastfm_genre_links/japan.php

http://jimmytidey.co.uk/data/lastfm_genre_links/uk.php

I can provide a better version of this data if anyone wants it - send me a message.

Thursday, June 17, 2010

Honey, the kids have attained Gigantick Dimensions

Last week The Royal Society put on display Robert Boyle's wish list of things that science might achieve in the future, written in the 1660s.

What did the acclaimed scientist want? "The Attaining Gigantick Dimensions." Blue sky thinking, far in advance of anything modern ideas people come up with. As the films would have it - "In an age before anesthesia, in a time of brutal civil war, when the black death stalked the land, one man had a dream - to be taller".

There's something about the spelling and the school-boy ambition of it which I love. When Gulliver's Travels was published 35 years after Boyle's death I hope the book was inspired by his desire for greater physical heft. Swift - pleasingly described by Will Self, who has himself toyed with scale in his stories, as satire's Shakespeare - and Boyle were fellow Anglo-Irish theologians.

But how big did Bobby Boyle want to be? Gigantick! Is that the size of a house? So big he had to be accommodated in a cathedral, as with Gulliver? Robert Hooke, Boyle's scientific protege, was instrumental in the rebuilding of London after the Great Fire. Now we know why - he wanted St Paul's as a hangar for his newly upscaled friend to be berthed in.

Perhaps, again like the story, he wanted to be large enough to wade out into the sea and engage in naval warfare by overturning ships. After all, contemporaneously with the publication of the list many worried that the Dutch were going to sail up the Thames and take tactical advantage of the recent fire.

I don't think pV = k, the gas law most school children know Boyle for, really does justice to his flights of imagination. Could we have a film from the "Honey, I Shrunk the Kids" franchise to honor him? I think that more accurately reflects his spirit. It's what he would have wanted, when he wasn't pondering "The Emulating of Fish without Engines by Custome and Education". What a guy...

Wednesday, June 16, 2010

Local by Social - Where sexy social media and bashful local government go to flirt

When you're a nerd like me it's easy to think that a website is the solution to everything, so I try to remind myself that it probably isn't.

When I went to Local by Social yesterday I was determined to maintain a detached skepticism - either I was seduced by the conferency world of balancing cups and saucers of pump-action coffee while trying to avoid conversational lapses with people I've never met before (unlikely), or I need to be a bit more optimistic. Only briefly did the event feel like pie-in-the-sky geekery.

Local by Social was a discussion of the ways in which local governments can utilise social media, taking an extraordinarily broad definition of that term. Topics ranged from the stratospheric stature of reports compiled for the Harvard Kennedy School of Government to the more prosaic (and eternal) question of how to get doubting officials to engage with social media.

From the more philosophical end of the spectrum I was surprised to discover an aspect of the web which I've not really come across before. My assumption was that debate would all be around some variety of communication between government and people, probably something to do with eschewing the broadcast model and adopting a many-to-many, responsive approach to social media - a message we've all heard before.

But Local by Social was way ahead of me with the concept of "social innovation", or "Public Service 2.0" (I've never heard the "2.0" suffix used so much). The concept is to close the loop of Official-Public-Official conversation by having, to varying degrees, the public actually solve problems themselves.

Examples included Washington's "Snowmageddon", where citizens used Google Maps to allocate the work of clearing snow between themselves when the authorities were overwhelmed, and Brighton Council using Twitter to find volunteer van drivers for meals on wheels during another bout of inclemency. Or Fix My Tweet, which allows you to tell the council where potholes are. The difference here is that work that would once have been carried out by an authority is being done for free through social media magic.

Instead of hulking, snail-paced governmental organisations facilitating these processes we were asked to imagine social entrepreneurs setting up not-for-profits to deliver these types of service. The question of what would happen when someone tried to monetise their successful website was left hanging in the air. Being new to the world of e-Government I was surprised these stories don’t seem to be more prevalent in the general web-trend reporting press, but perhaps that’s because the sites I follow tend to come from the marketing angle.

Another theme was adversity as a catalyst. The above examples were not the only times that snow precipitated social innovation. But, as Dominic Campbell (founder of FutureGov) explained in literally Churchillian terms ("We will innovate on the beaches..."), the most important opportunity in adversity was the coming cuts in public spending. Referencing Schumpeter's creative destruction he pointed out that more than ever authorities are receptive to novel cost-saving ideas. Big stupid bureaucracies listening to exciting web start-ups: too good to be true? Well, if you listen to David Cameron's TED Talk and his Big Society rhetoric (which I'm willing to concede might be just slightly more than electioneering) then perhaps there is room for a chink of optimism.

Am I maintaining detached skepticism? I'm trying... honest. Here's a dose: Twitter doesn't seem to me like it's important for local councils. In a break-out session at the end of the day a lot of local government people were bemoaning the fact that councillors wouldn't Tweet, but I don't think they really need to.

It might be useful as a means of disseminating press releases to journalists, but that doesn't mean that you can garner the ear of the populace using Twitter. Only 30,000 Twitter users tweeted about the election on election night. That's one in 2,000 people, and a close run general election is a lot more interesting than local government.

Much more exciting to me is the possibility of local government making itself known on existing localised communities. Filippo Ciampini, who is writing a masters on the subject of government public relations, told me that there are lots of ethnically based online communities and forums - citing Islington's Chinese forum as a vibrant example - which seems to me the perfect place to use the web to communicate with hard-to-reach people. Filippo added that although they are mainly used by second and third generation immigrants, these are the people who probably have most contact with first generation immigrants - a group that the council traditionally has great difficulty reaching.

None of the local government mandarins in our group said their council was using existing message boards as a means of outreach; one indicated that there was a perception that to engage on these forums might somehow undermine the legitimacy of local government's voice.

But it's such a missed opportunity. Hugh Flouch of Harringay Online told us that his hyper-local community has 3000 members (though we might question how many are active), while the area it covers has a population of 17,000. As he pointed out, that's more penetration than BBC Two.

Little tidbit from our break out group: apparently Coca Cola's social media principles are a great place to start if you need to write guidelines for an organisation that is precious about its brand, on which front I'm sure Coca Cola is unimpeachable.

We also discussed the fear many higher up the organisational chain have of saying the wrong thing online. I don't think there is anywhere to hide from the internet's wrath. Recently a representative of Hackney council gave duff information on the phone (decidedly an old medium), only to find a recording posted on Private Eye's website (incidentally, also a Luddite publication which dislikes the internet). So it's time for local councils to dip their toes into the wide social media sea. It might not look enticing, but sooner or later someone is going to throw you in anyway.

Have I trodden the line of disinterested rationality? I hope so, but to end on a positive note, the more you engage with people on the web the more they love you. Paul Hodgkin of Patient Opinion told us that his service which allows patients to... give their opinion receives only around 20% purely negative feedback. Amazingly, after they have been moderated, about 10% of feedback leads to some kind of change is hospital practice, and the 20% of feedback which was completely positive was a huge boost to staff morale.

Research from Hugh Flouch (he of Harringay Online) suggests that the more officials engage with online communities the better users' perceptions of them are. That was true for MPs, council workers, councillors themselves and police.

So, to sum up in a completely equivocal manner: budget cuts lead to a world where municipal work is carried out for free by the people who do it out of the goodness of their own hearts and then thank each other through feedback services provided by not-for-profit social entrepreneurs. And no one who isn’t completely comfortable with Twitter will be forced to use it as part of a box-ticking drive to engage with Interweb 2.0.

When I went to Local by Social yesterday I was determined to maintain a detached skepticism - either I was seduced by the conferency world of balancing cups and saucers of pump-action coffee while trying to avoid conversational lapses with people I've never met before (unlikely), or I need to be a bit more optimistic. Only briefly did the event feel like pie-in-the-sky geekery.

Local by Social was a discussion of the ways in which local governments can utilise social media, taking an extraordinarily broad definition of that term. Topics ranged from the stratospheric stature of reports compiled for the Harvard Kennedy School of Government to the more prosaic (and eternal) question of how to get doubting officials to engage with social media.

From the more philosophical end of the spectrum I was surprised to discover an aspect of the web which I've not really come across before. My assumption was that debate would all be around some variety of communication between government and people, probably something to do with eschewing the broadcast model and adopting a many-to-many, responsive approach to social media - a message we've all heard before.

But Local by Social was way ahead of me with the concept of "social innovation", or "Public Service 2.0" (I've never heard the "2.0" suffix used so much). The concept is to close the loop of Official-Public-Official conversation by having, to varying degrees, the public actually solve problems themselves.

Examples included Washington's "Snowmageddon", where citizens used Google Maps to allocate the work of clearing snow between themselves when the authorities were overwhelmed, and Brighton Council using Twitter to find volunteer van drivers for meals on wheels during another bout of inclemency. Or Fix My Tweet, which allows you to tell the council where pot holes are. The difference here is that work that would once have been carried out by an authority is being done for free through social media magic.

Instead of hulking, snail-paced governmental organisations facilitating these processes we were asked to imagine social entrepreneurs setting up not-for-profits to deliver these types of service. The question of what would happen when someone tried to monetise their successful website was left hanging in the air. Being new to the world of e-Government I was surprised these stories don’t seem to be more prevalent in the general web-trend reporting press, but perhaps that’s because the sites I follow tend to come from the marketing angle.

Another theme was adversity as a catalyst. The above examples were not the only times that snow precipitated social innovation. But, as Dominic Campbell (founder of FutureGov) explained in literally Churchillian terms ("We will innovate on the beaches..."), the most important opportunity in adversity was the coming cuts in public spending. Referencing Schumpeter's creative destruction, he pointed out that more than ever authorities are receptive to novel cost-saving ideas. Big stupid bureaucracies listening to exciting web start-ups: too good to be true? Well, if you listen to David Cameron's TED Talk and his Big Society rhetoric (which I am willing to concede might be just slightly more than electioneering) then perhaps there is room for a chink of optimism.

Am I maintaining detached skepticism? I'm trying... honest. Here's a dose: Twitter doesn't seem to me like it's important for local councils. In a break-out session at the end of the day a lot of local government people were bemoaning the fact that councillors wouldn't Tweet, but I don't think they really need to.

It might be useful as a means of disseminating press releases to journalists, but that doesn't mean that you can garner the ear of the populace using Twitter. Only 30,000 Twitter users tweeted about the election on election night. That's one in 2,000 people, and a close-run general election is a lot more interesting than local government.

Thursday, May 13, 2010

We know that David Cameron doesn't like Twitter - what does it think of him?

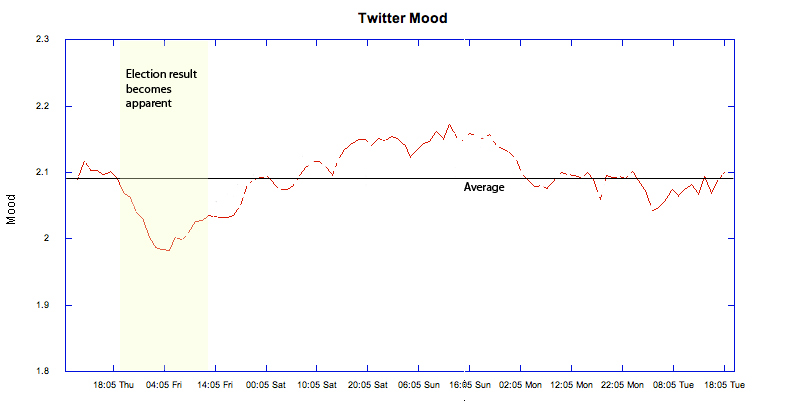

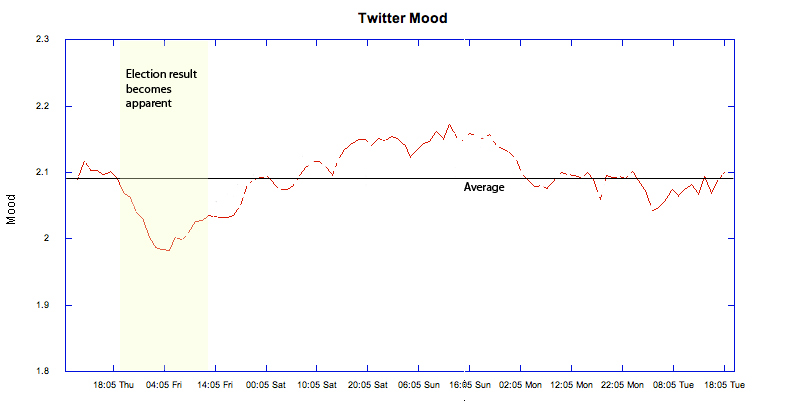

Over the course of the General Election I recorded 1000 random tweets every hour and sent them to tweetsentiments.com for sentiment analysis.

Tweetsentiment have a service which gives one of three values to each tweet. '0' means a negative sentiment (unhappy tweet), '2' a neutral or undetermined sentiment and '4' positive (happy tweet). Similar technology is used to detect levels of customer satisfaction at call centres by monitoring phone calls.

Obviously it's difficult for a machine to detect the emotional meaning of a sentence, especially with the strange conventions used on Twitter. Despite this Tweetsentiment seems to be fairly reliable - tweets which express happy emotions tend to be rated as such, and vice versa. More accurately, if Tweetsentiment does make a classification it tends to get it right. Sometimes an obviously positive / negative tweet gets a '2', but that shouldn't affect things here.

My hypothesis was that the Twitterati would be less happy if there was a Conservative victory. Of course I can’t prove that Twitter has a bias to the left, but I would presume that young, techy, early adopters are more likely to be left leaning. The reaction to the Jan Moir Stephen Gately article perhaps supports this.

David Cameron famously noted that Twitter is for twats; I wondered if Twitter would reciprocate...

The graph indicates that usually Twitter is just slightly positive, with a mood value of 2.1 on average. As predicted, as a Conservative victory becomes apparent on Thursday evening there is a decline in mood which lasts until Saturday lunchtime. Then everyone cheers up, presumably goes down the pub, and is pretty chirpy for Sunday lunch. Sentiment only returns to average for the beginning of work on Monday morning.
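For anyone wanting to reproduce the graph, here is a minimal sketch of how an hourly mood value could be computed from the 0 / 2 / 4 scores. It assumes the mood value is simply the mean score of each hourly sample; the function name `hourly_mood` and the hour labels are made up for illustration and are not taken from the original script.

```python
from collections import defaultdict
from statistics import mean


def hourly_mood(scored_tweets):
    """Average the sentiment scores for each hour.

    scored_tweets: iterable of (hour_label, score) pairs, where score is
    0 (negative), 2 (neutral or undetermined) or 4 (positive).
    Assumption: the plotted mood value is the plain mean per hour.
    """
    by_hour = defaultdict(list)
    for hour, score in scored_tweets:
        if score not in (0, 2, 4):
            raise ValueError(f"unexpected sentiment score: {score!r}")
        by_hour[hour].append(score)
    # One mean per hour; the hourly sample size is allowed to vary.
    return {hour: mean(scores) for hour, scores in by_hour.items()}


# Hypothetical sample data, just to show the shape of the input.
sample = [
    ("Thu 22:00", 0), ("Thu 22:00", 2), ("Thu 22:00", 4),
    ("Fri 09:00", 2), ("Fri 09:00", 4),
]
moods = hourly_mood(sample)
```

Because neutral tweets score 2, a mostly neutral sample with slightly more happy than unhappy tweets lands just above 2, which is consistent with the 2.1 average quoted above.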

In short, it does look like the election result was a disappointment to Twitter.

Obviously we need to know what normal Twitter behaviour is over the course of the week to draw very much information from the graph, and this is something that I’m going to try and produce a graph for soon.

It does look as though the size of negative reaction to a once-a-decade change in government is about the same magnitude as the positive mood elicited by the prospect of Sunday lunch - which I think is fairly consistent with the vicissitudes of Twitter as I experienced them.

I used Twitter’s API to gather the data, and frankly, it’s not great, particularly if you want to get Tweets from the past. I was surprised to discover that any Tweets more than about 24 hours old simply disappear from the search function on Twitter.com – in effect they only exist in public for a day. For this reason the hourly sample size wasn’t always exactly 1000, but it was on average.

I’ll post again when I have some more data on normal behaviour. I’m also curious to find out if different countries have different average happiness levels on Twitter, but I think finding a Tweetsentiment-style service for other languages might prove difficult.

Tuesday, May 4, 2010

Michael Jackson is more important than Jesus. Fact.

My last post used Wikipedia’s list of dates of births and deaths to build a timeline showing the lifespans of people who have pages on Wikipedia. There are a lot of people with Wikipedia pages, so I limited it to only include dead people.

That still leaves you with a lot of people to fit on one timeline, so I wanted to prioritise ‘important’ or ‘interesting’ people at the top and show only the most 'important' 1000. Some have been confused by my method for doing this, and others have questioned its validity, so this post will address both issues. I’m also going to suggest an improvement. It turns out that whatever I do Michael Jackson is more important than Jesus. I'm just the messenger.

Explaining the method

To get a measure of ‘importance’ I used work done by Stephan Dolan. He has developed a system for ranking Wikipedia pages which is very similar to the PageRank system which Google uses to prioritise its search results.

Wikipedia’s pages link to one another, and Stephan Dolan’s algorithm gives a measure of how well linked a particular page is to all the other Wikipedia pages. If we want to know how well linked in the page about Charles Darwin is, the algorithm examines every other page in Wikipedia and works out how many links you would have to follow to get from the page it is examining to the Charles Darwin page using the shortest route.

For example, to get from Aldous Huxley to Charles Darwin takes two links, one from Aldous to Thomas Henry Huxley (Aldous’s grandfather) and then another to Darwin (TH Huxley famously defended evolution as a theory). Dolan’s method calculates the number of clicks from every page in Wikipedia to the Charles Darwin page, and then takes the average. To get to Charles Darwin takes an average of 3.88 clicks from other Wikipedia pages.
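The idea can be sketched in a few lines of Python. This is not Dolan's own code, just a toy illustration of the "average number of clicks" described above: it runs a breadth-first search backwards from the target page over a tiny made-up link graph. The function name and the handling of pages that cannot reach the target (they are simply ignored) are assumptions.

```python
from collections import deque


def average_clicks_to(target, links):
    """Mean shortest-path length from every page that can reach `target`.

    links: dict mapping each page to the list of pages it links to.
    Assumption: pages with no route to the target are left out of the mean.
    """
    # Reverse the graph so a single search from the target finds the
    # distance from every other page to it.
    reverse = {}
    for page, outgoing in links.items():
        reverse.setdefault(page, [])
        for linked in outgoing:
            reverse.setdefault(linked, []).append(page)

    distance = {target: 0}
    queue = deque([target])
    while queue:
        page = queue.popleft()
        for source in reverse.get(page, []):
            if source not in distance:
                distance[source] = distance[page] + 1
                queue.append(source)

    clicks = [d for page, d in distance.items() if page != target]
    return sum(clicks) / len(clicks) if clicks else None


# A toy link graph, not real Wikipedia data.
toy_links = {
    "Aldous Huxley": ["Thomas Henry Huxley"],
    "Thomas Henry Huxley": ["Charles Darwin"],
    "Evolution": ["Charles Darwin"],
    "Charles Darwin": ["Evolution"],
}
darwin_index = average_clicks_to("Charles Darwin", toy_links)
```

In the toy graph Aldous Huxley is two clicks from Darwin and the other two pages are one click away, so the average is 4/3. On the real Wikipedia link graph the same calculation would need to run over millions of pages.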

Similarly, Google shows pages that have many links pointing to them nearer the top of its search results.

This method works OK, but it could be better. For example Mircea Eliade ranks as the fifth most important dead person on Wikipedia, taking on average 3.78 clicks to find him. But Mircea Eliade is a Romanian historian of religion - hardly a household name. We can take this as a positive statement: perhaps Mircea Eliade is a figure of hitherto unrecognised importance and influence. On the other hand it seems impossible that he can be more ‘important’ than Darwin.

Testing the validity of the Dolan Index

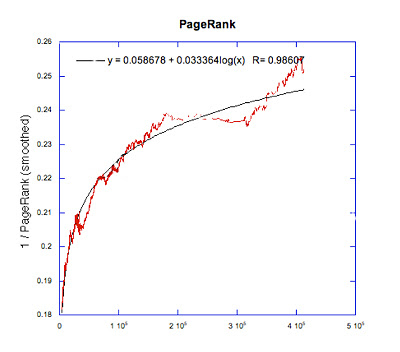

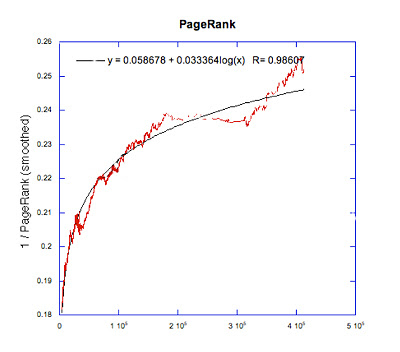

I decided it would be interesting to compare what I’m going to call the Dolan index (the average number of clicks as described above) with two other metrics that could be construed as measuring the importance of a person. Before we do that, here is a graph of what the Dolan index of dead people on Wikipedia looks like.

The bottom axis shows the rank order of pages, from Pope John Paul II, who ranks 275th by Dolan index on Wikipedia, to Zi Pitcher, who comes 430900th in terms of Dolan index. It makes a very tidy log plot.

As I mentioned previously, the Dolan index is very similar to a Google PageRank, so let's compare them.

The x axis is the same as the first graph: Wikipedia pages ordered from best linked to worst linked by Dolan index. A well linked page has a low Dolan index, but a high PageRank, so I used the reciprocal of PageRank for the y axis. I've also added a log best fit line.

Comparing with PageRank seems to indicate there is a reasonable correlation between Dolan index and PageRank, which is indicated by the fact the first and second graphs have a similar shape.

PageRank is only given in integer values between 1 and 10 (realistically, all Wikipedia pages have a PageRank between 3 and 7), so I’ve smoothed the curve using a moving average.
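For reference, a moving average of this kind can be sketched as below. The window size and the way the ends of the series are handled are assumptions (neither is stated here), and the function name is just for illustration.

```python
def moving_average(values, window=5):
    """Centred moving average; the window shrinks at the ends of the list.

    Assumption: `window` is the number of neighbouring pages averaged
    together. The value actually used for the graph is not recorded.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        start = max(0, i - half)
        end = min(len(values), i + half + 1)
        chunk = values[start:end]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


# Hypothetical integer PageRank values for pages in Dolan-index order.
pageranks = [3, 3, 6, 3, 3]
smooth = moving_average(pageranks, window=3)
```

With integer inputs the raw plot is a series of steps; averaging each value with its neighbours turns those steps into a curve that can be compared with the Dolan index graph.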

This seems to lend some weight to the Dolan Index as a measure.

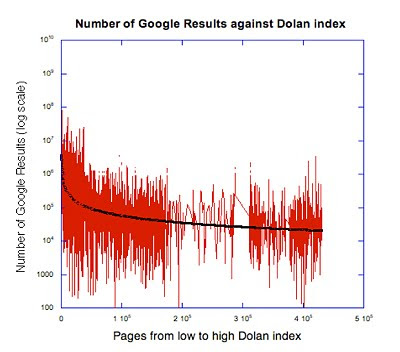

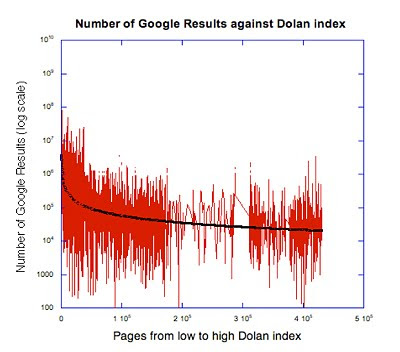

I’ve also made a comparison between the Dolan index and the number of results returned when searching for a person’s name (without quotes) in Google search. It should be noted that this number seems to be quite unstable - a search will give a slightly different number of results from one day to the next. I’ve used a log scale because of the range of results.

There is barely any correlation here, except at very low values of Dolan index. Despite this, it’s still possible for the number of Google results to be useful, as becomes apparent when trying to improve my measure of ‘importance’.

A suggestion for improvement

The problem with all the measures seems to be the noise inherent in the system. While Dolan Index, PageRank and number of Google results all provide a rough guide to ‘importance’ or ‘interest’ overall, each of them frequently gives unlikely results. How about using a mixture of all three? Here is a table comparing the top 25 dead people by Dolan index and using a hybrid measure of importance constructed from all three metrics.

| Dolan index | Hybrid measure |
| --- | --- |
| Pope John Paul II | Michael Jackson |
| Michael Jackson | Jesus |
| John F. Kennedy | Ronald Reagan |
| Gerald Ford | Jimi Hendrix |
| Mircea Eliade | Abraham Lincoln |
| Peter Jennings | Adolf Hitler |
| John Lennon | Albert Einstein |
| Adolf Hitler | William Shakespeare |
| Harry S. Truman | Charles Darwin |
| Ronald Reagan | Oscar Wilde |
| J. R. R. Tolkien | Woodrow Wilson |
| James Brown | Isaac Newton |
| Anthony Burgess | Elvis Presley |
| Elvis Presley | Walt Disney |
| Christopher Reeve | John Lennon |
| Susan Oliver | George Washington |
| Franklin D. Roosevelt | John F. Kennedy |
| Winston Churchill | Timur |
| Ernest Hemingway | Martin Luther |
| Theodore Roosevelt | Voltaire |

To get the hybrid measure I just messed around until things felt right. Here is the formula I came up with:

Hybrid measure = ((1 / Dolan index) × 20) + (PageRank × 0.6) + (log(number of results) × 0.6)

For some reason additive formulas give better results than multiplicative ones.
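Written out as code, the formula above looks something like this. The weights (20, 0.6 and 0.6) are the ones given above; the base of the logarithm is not stated, so base 10 is an assumption here, and the input values in the example are invented rather than real figures for anyone.

```python
import math


def hybrid_measure(dolan_index, pagerank, num_results):
    """Additive hybrid of the three 'importance' metrics.

    dolan_index: average clicks to reach the page (lower is better linked).
    pagerank: integer toolbar PageRank.
    num_results: number of Google results for the person's name.
    Assumption: the log is base 10; the formula does not say.
    """
    return (
        (1 / dolan_index) * 20
        + pagerank * 0.6
        + math.log10(num_results) * 0.6
    )


# Invented inputs, purely to show the arithmetic.
example = hybrid_measure(dolan_index=4.0, pagerank=5, num_results=1000)
```

With these invented inputs the three terms contribute 5, 3 and 1.8, so each metric has a similar order of magnitude in the sum. That is probably why the weights "felt right": no single noisy metric dominates.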

Using the hybrid measure seems to have removed the surprises (like Peter Jennings) although you might still argue that Oscar Wilde or Jimi Hendrix are much too high. Michael Jackson comes out as bigger than Jesus, but then he is an exceptionally famous person, and he died much more recently than Jesus. Timur (AKA Tamerlane) is a bit of a curiosity.

I considered ignoring the number of Google results because it’s such a noisy dataset. However, it’s the only reason that Jesus appears at all in this list: he gets a very low ranking (4.01) from the Dolan Index. Any formula which brings Jesus out on top (which I think you could make a reasonable case for his deserving, at least over Michael Jackson!) gives all kinds of strange results elsewhere.

I am a bit suspicious of the "number of Google results" metric. In addition to its volatility, the number of results fails to take into account that occurrences of words such as "Newtonian" should probably count towards Newton's ranking, while people called David Mitchell will benefit artificially from the fact that at least two famous people share the name.

Any further investigation would have to consider what made a person ‘important’ – would it simply be how prominent they are in the minds of people (Michael Jackson and Jimi Hendrix) or would it reflect how influential they were (Charles Darwin for example, or the notably absent Karl Marx)?

I love the idea that the web reflects the collective consciousness, a kind of super-brain aggregation of human knowledge.

Just this week the idea of reflecting the whole of reality in one enormous computer system was promoted by Dirk Helbing, although my formula doesn't rate him as very important, so I'm unsure as to how seriously to take this.

Sunday, April 11, 2010

Wikipedia Book of the Dead

DBpedia mashup: the most important dead people according to Wikipedia

The timeline below shows the names of dead people and their lifespans, as retrieved from Wikipedia. They are arranged so that people nearer the top are the best linked in on Wikipedia, as measured by the average number of clicks it would take to get from any Wikipedia page to the page of the person in question.

I had imagined that Wikipedia 'linkedin-ness' would serve as a proxy for celebrity, which it kind of does - but only in a loose way.

Values range from 3.72 (at the top) to 4.04 (at the bottom). This means that if you were to navigate from a large number of Wikipedia pages, using only internal Wikipedia links, it would take you, on average, 3.72 clicks to get to Pope John Paul II. This data set was made by Stephan Dolan, who explains the concept better than me. Basically, it's the 6 degrees of Kevin Bacon on Wikipedia.

I looped through the data set and queried DBpedia to see if the Wikipedia article was about a person, and if so retrieved their dates of birth and death.
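The DBpedia lookup can be sketched as a SPARQL query built per article. This is a guess at the general shape rather than the query actually used: the function name is hypothetical, the `dbo:Person`, `dbo:birthDate` and `dbo:deathDate` terms come from the current DBpedia ontology (the 2010 vocabulary may have differed), and titles containing special characters would need extra escaping.

```python
def person_dates_query(article_title):
    """Build a SPARQL query for a person's birth and death dates.

    Assumption: the article maps to http://dbpedia.org/resource/<Title>
    with spaces replaced by underscores. Requiring a death date means
    living people (and non-people) return no rows.
    """
    resource = article_title.strip().replace(" ", "_")
    return f"""
PREFIX dbo: <http://dbpedia.org/ontology/>
SELECT ?birth ?death WHERE {{
  <http://dbpedia.org/resource/{resource}> a dbo:Person ;
      dbo:birthDate ?birth ;
      dbo:deathDate ?death .
}}
""".strip()


query = person_dates_query("Charles Darwin")
```

Each query string would then be sent to DBpedia's public SPARQL endpoint, once per article in the Dolan data set, keeping only the articles that return a row.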

The timeline does show a certain amnesia on the part of Wikipedia: Shakespeare and Newton are absent, while Romanian historian of religion Mircea Eliade comes 5th. If I had included people who are alive, tennis players would have dominated the list (I don't know why) - Billie Jean King is the second best-linked article on Wikipedia, one ahead of the USA (the UK is number one!).

Any mistakes (I have seen some) are due to the sketchiness of the DBpedia data, though I can't rule out having made some mistakes myself...

The results are limited to the top 1000, and they only go back to 1650. Almost no names previous to 1650 appeared, the exceptions being Jesus (who was still miles down) and Guy Fawkes.

In case you were wondering 'Who's Saul Bellow below?', the answer is Rudolf Hess.

Sunday, February 7, 2010

Smoke Stacks to Apple Macs

The digital revolution will not be televised - to the contrary, is it possible that no artist or medium can be said to have adequately addressed the information age?

Zizek once summarised Marx as having said that the invention of the steam engine caused more social change than any revolution ever would. Marx himself doesn't seem to have provided a useful soundbite to this effect (at least not one that I can find through Google), so I'm afraid it will have to remain second hand. It's a powerful sentiment, whoever originated it - which philosopher's views cannot be analyzed as the product of the social and technological novelties of his day?

It's easy to see that the technology that is most salient in our age is the internet, which has been made possible by consumer electronics. Have our philosophers stepped forward to engage with the latest technological crop?

Moving on from philosophers, what of our artists? Will Gompertz recently posted to share an apparently widely held view that no piece of art has yet spoken eloquently from or about the internet. He cites Turner Prize-winning Jeremy Deller describing "a post-warholian" era, presumably indicating that Warhol was the last person to adequately reference technological change in the guise of mass production. I wonder if the Saatchi-fuelled inflorescence has also captured something of the marketing-led landscape we currently live in, but whatever the last sufficient reflection on cultural change afforded by art was, I think we may be on safe ground in stating that the first widely accepted visual aperçu of the digital era is still to come.

Which is some surprise when you consider, for example, how engaged the news agenda is with technology: I was amazed to see that Google's Wave technology (still barely incipient) got substantial coverage on BBC news.

With my employment centering on the web, and my pretensions at cultural engagement, this weekend I visited the Kinetica Art Fair. Kinetica is a museum which aims to 'encourage convergence of art and technology'. The fair certainly captured one aspect of contemporary mood - a very reasonably priced bar was a welcome response to our collective financial deficit.

Standout pieces included a cleverly designed mechanical system for tracing the contours of a plaster bust onto a piece of paper and a strangely terrifying triangular mirror with mechanically operated metal rods. It looked like a Buck Rogers-inspired torture device designed to inflict pain by a method so awful that you'd have to see it in operation before its evil would be comprehensible. The other works included a urinal which provided an opportunity for punters to simulate pan-global urination (sadly not with real urine) by providing a jet of water and a globe in a urinal. I would defy anyone not to be entertained by spending time wandering round the fair.

However, Will Gompertz's challenge was not answered at Kinetica - the essence of technological modernity was not distilled into any of the works - not even slightly.

I've been mulling over various possible reasons for this failure, and quite a few suggestions spring to mind. Do computers naturally alienate artists? Is information technology too visually banal to be characterised succinctly?

I'd like to suggest that it's the transitory nature of our electronic lives that makes them so hard to pin down. Mobile phones, web sites, computers and operating systems from a decade ago all look ludicrously dated - it's almost impossible to capture the platonic form of these items because they have so little essential similarity. Moreover, their form is almost an accident, and not connected with their more profound meaning in any way. The boats of the mercantile age and the smoke stacks of the industrial age all seem to denote something broader - how can communism be separated from its tractors? Yet the form factor of my computer is trivial. Form and functional significance are of necessity separated in digital goods; their flexibility is the source of their power.

In some way I think films give us tacit acknowledgment of the contingent nature of the digital environment that we spend much of our lives in: no protagonist is ever seen using Windows on their computer, and in films computer interfaces are always generic. When we see a Mac in a film it is impossible to see it as anything other than product placement.

So, the Kinetica Art Fair may not have been able to help society understand its relationship with technology, but actually, despite their rhetoric, I think it was a little unfair to expect it to. Really the fair was about works facilitated by technology, rather than about it.

But, in case you think I've picked a straw man in Kinetica, let me say that the V&A's ongoing exhibition Decode really does no better, though its failures and successes are another topic.

Monday, February 1, 2010

Internet Protocol and HRH the Queen

Whatever we end up using the web for, don't the world's citizens lead more equal lives if they are all mediated by the same technology?

The Queen tweets. She's commissioned a special jewel-embossed netbook and a bespoke Twitter client skinned with ermine and sable.

I made that up. For starters, she hasn't actually started tweeting - there is a generic royal feed which announces the various visits and condescensions of Britain's feudal anachronism, but nothing from miss fancy hat herself. Perhaps royal protocol means she can only use it if her followers can find a way of curtsying in 140 characters?

The feed does give an insight into how boring the Royals' lives might actually be - opening wards and meeting factory workers - when they aren't having a bloody good time shooting and riding. However, as a PR initiative it breaks the rule that, for a Twitter account to be of any interest, tweets must emanate from the relevant horse's mouth, if you'll forgive the chimerical metaphor. If you can't have the lady herself, I don't really think there is much point in bothering. But that's not the point I'm here to make.

I'm more interested in the fact that, should any of us choose to, Bill Gates, Sir Ranulph Twisleton-Wykeham-Fiennes, 3rd Baronet OBE, Osama Bin Laden and I will have exactly the same experience when we use Twitter (assuming it's available in the relevant language).

I suppose Bin Laden might have quite a slow connection in Tora Bora, and probably Bill Gates has something faster than Tiscali's 2meg package. Details aside, everyone is doing the same thing.

Actually, not only will we be using the same website, we'll be using very similar devices. Bill probably doesn't have a Mac like me (he may be the richest man in the world, but he can still envy me one thing), but all our computers will be very similar.

The reason for this is that both websites and computer technology have very high development costs, and low marginal costs per user. Even the Queen can't afford to develop an iPhone, but everyone can afford to buy one.

If a lot of your life is mediated by technology then this is going to be very important to you. While there is healthy debate about the web's democratisation of publishing, I think we might reasonably add to the web's egalitarian reputation its ability to give people of disparate incomes identical online experiences.

That doesn't sound like a blow against inequality and tyranny in all its forms - but nonetheless I think it's important. Even people using OLPC computers [low priced laptops aimed at the third world] have basically the same experience of the internet as you or I. That's to say Uruguayan children will quite possibly spend a good part of their day doing exactly the same things as New York's office workers and Korea's pensioners. When you consider that only very recently there were probably no major similarities in these disparate lives I think it does constitute a significant development.

Of course, for all I know a line of luxury websites will come along and exclude some strata of the social pile. In a way it's already happened - we've seen the thousand dollar iPhone app - but it's hard to see this one-off as part of a pattern. This is not to say that the 'freemium' business model [basic website for free, pay to get the premium version] couldn't exclude certain people, it's more that this model can only exist when there isn't much pressure for a free version. At the moment, there aren't any widely used web applications that aren't available at zero cost - of course this may change if your audience is sufficiently well off to attract paid advertising, but there again it may not.

This is a phenomenon that's been observed before: technology tends to eliminate differences between cultures. It's been termed the Apparatgeist, and has been developed as a concept in response to the observation that mobile phone habits, once differentiated locally, are now more or less identical in all developed economies. As a concept it surely applies equally well to class and income - leaving us in a more equal experiential world. And perhaps also a monoculture - but then isn't that entailed in the new equalities that so many internet optimists evangelise?
